The Rise of Context Engineering

What’s holding back AI isn’t model size; it’s comprehension. And comprehension requires context.

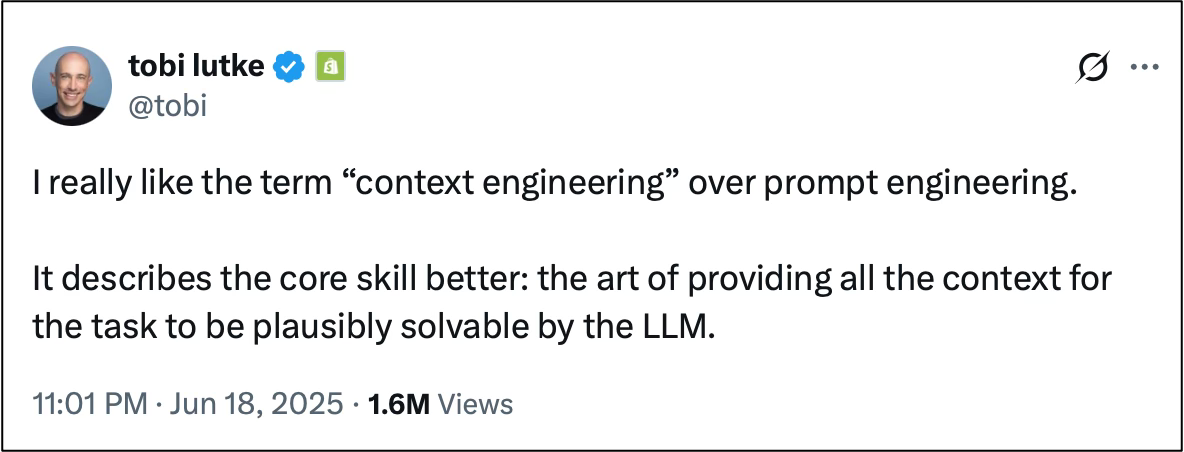

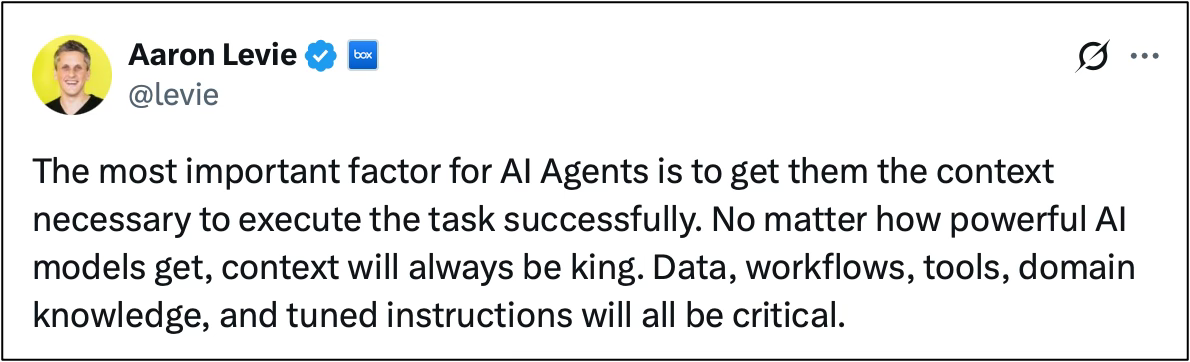

When Tobias Lütke recently tweeted that “context engineering” is a better term than prompt engineering, he wasn’t nitpicking language. He was pointing to AI’s core challenge: getting models to behave correctly depends on context. Or as Aaron Levie put it: “No matter how powerful AI models get, context will always be king.”

Both Tobi and Aaron are right: what’s holding back AI progress isn’t model size but comprehension, and comprehension depends on context.

Even the most powerful models from OpenAI, Anthropic, Google, and Microsoft can’t reason reliably without sufficient context: information like memory (what’s happened in the past), state (what’s happening right now), and environment (where the interaction is taking place and under what conditions). Without this information, models can’t deliver reliable results.

What Is Context, Really?

Context helps “situate” a model by giving it what it lacks: awareness of where it is, who it’s engaging with, and what actually matters in the moment. But context isn’t just extra instructions appended to a prompt; it’s data and signals integrated from other systems that help the model behave appropriately, such as:

Domain context: the user’s industry, jargon, and expectations

Task context: the objective, constraints, and relevant inputs

Data context: what’s been said already, what’s been done before

Environmental context: time and location, urgency, and current state

Human context: preferences, history, and mentality
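As a rough illustration, the five categories above could be carried through a system as one structured object. This is a minimal sketch, not a prescribed design: the `ContextBundle` class, its field names, and the bracketed text layout are all assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class ContextBundle:
    """Hypothetical container for the five kinds of context listed above."""
    domain: dict = field(default_factory=dict)
    task: dict = field(default_factory=dict)
    data: dict = field(default_factory=dict)
    environment: dict = field(default_factory=dict)
    human: dict = field(default_factory=dict)

    def render(self) -> str:
        """Serialize the non-empty categories into a plain-text block."""
        sections = []
        for name in ("domain", "task", "data", "environment", "human"):
            values = getattr(self, name)
            if not values:
                continue  # skip categories that have no signals yet
            lines = [f"- {key}: {value}" for key, value in values.items()]
            sections.append(f"[{name} context]\n" + "\n".join(lines))
        return "\n\n".join(sections)


# Illustrative values only; real signals would come from other systems.
bundle = ContextBundle(
    domain={"industry": "insurance"},
    task={"objective": "summarize claim status"},
    environment={"urgency": "high"},
)
print(bundle.render())
```

Keeping the categories separate until the last step makes it easier to see which kind of context is missing for a given request.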

Some examples of context:

In customer support, an LLM needs more than company policy (rules, procedures, and guidelines). It needs access to the customer’s past ticket history, the tone of the last message, and the likelihood that the user will escalate.

In sales, a model can only help if it sees CRM details like deal status, recent activity, assigned rep, prior emails, preferences, and funnel stage.

In software development, a Copilot-style assistant needs access to open files, code history, unresolved bugs or tickets, and awareness of the developer’s coding style.

In other words, LLM intelligence isn’t based only on data the model saw during training. It also depends on how well the model is “situated” in a specific moment, task, or user interaction. Without this situational understanding, LLMs are likely to hallucinate, misread intent, or offer advice that’s technically correct but contextually useless.

These contextual details can’t simply be written into a prompt by hand. They come through integration with external data sources, decision systems, and algorithms that give the model the context it needs to respond correctly.
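To make the integration point concrete, here is a small sketch built on the customer support example. Everything in it is hypothetical: the in-memory dictionaries stand in for real ticketing and messaging systems, and the escalation heuristic is a toy rule chosen for illustration, not a recommended model.

```python
from typing import Callable, Dict

# Stand-ins for external systems; in practice these would be API or database calls.
TICKETS = {"cust-42": ["Refund delayed", "Refund still missing"]}
LAST_MESSAGE_TONE = {"cust-42": "frustrated"}

ContextProvider = Callable[[str], object]


def ticket_history(customer_id: str) -> list:
    """Look up past tickets for a customer (empty list if none)."""
    return TICKETS.get(customer_id, [])


def last_message_tone(customer_id: str) -> str:
    """Return the tone label of the customer's last message."""
    return LAST_MESSAGE_TONE.get(customer_id, "unknown")


def escalation_risk(customer_id: str) -> str:
    """Toy heuristic (an assumption): repeat tickets plus frustration means high risk."""
    repeated = len(ticket_history(customer_id)) >= 2
    frustrated = last_message_tone(customer_id) == "frustrated"
    return "high" if repeated and frustrated else "low"


# Each provider pulls one signal from one source system.
PROVIDERS: Dict[str, ContextProvider] = {
    "ticket_history": ticket_history,
    "last_message_tone": last_message_tone,
    "escalation_risk": escalation_risk,
}


def gather_context(customer_id: str) -> dict:
    """Call every registered provider and collect the results by name."""
    return {name: provider(customer_id) for name, provider in PROVIDERS.items()}


print(gather_context("cust-42"))
```

Registering providers by name keeps each integration independent, so a new source can be added without touching the code that builds the model request.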

Context Engineering: The Next Core Skill

Aaron Levie also framed context as strategic: “Data, workflows, tools, domain knowledge, and tuned instructions will all be critical.” He wasn’t talking about implementation best practices. He was describing context as a competitive advantage, one that becomes hard to copy when done right.

As language models continue to commoditize, the real differentiator is how well they perform in real-world use. That performance comes down to how deeply they’re integrated into workflows, how easily they adapt through fine-tuning, and how reliably they manage safety and alignment.

So what does this all mean for builders? It means the real value isn’t in prompting. It’s in architecting systems that continuously feed the model the context it needs. It’s in designing:

Memory architectures

Data access layers

Workflow integrations

State tracking

Role and identity awareness

Real-time context updaters

This is context engineering. And as Tobias Lütke rightly put it, it’s becoming the most important AI skill to learn.
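A few of the components listed above (memory, state tracking, and role awareness) can be sketched in a handful of lines. This is an illustrative assumption about how the pieces might fit together, not a reference architecture; the `SessionContext` class and its method names are invented for this example.

```python
from collections import deque


class SessionContext:
    """Hypothetical holder for memory (the past), state (the present), and role."""

    def __init__(self, role: str, max_memory: int = 3):
        self.role = role
        # Bounded memory: the oldest events drop off once the limit is reached.
        self.memory = deque(maxlen=max_memory)
        self.state = {}

    def remember(self, event: str) -> None:
        """Append an event to short-term memory."""
        self.memory.append(event)

    def update_state(self, **changes) -> None:
        """Merge real-time changes into the current state."""
        self.state.update(changes)

    def snapshot(self) -> dict:
        """Return a copy of the context to send along with the next model call."""
        return {
            "role": self.role,
            "memory": list(self.memory),
            "state": dict(self.state),
        }


session = SessionContext(role="support_agent", max_memory=2)
session.remember("user asked about refund")
session.remember("agent requested order id")
session.remember("user supplied order id")  # evicts the oldest event
session.update_state(open_ticket="T-100", urgency="high")
print(session.snapshot())
```

The bounded deque is one simple way to keep memory from growing without limit; a production system would more likely summarize or retrieve older events than discard them.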

LLMs don’t need to get bigger. They need to understand.

In a previous piece, I explored how lightweight psychological signals improve model outputs. In experiments, a compact vector representing seven such signals led to sharper, safer, and more aligned responses from models like GPT-4o, Gemini, and Copilot. It’s just one example of how subtle, well-integrated context signals can improve model behavior.

The takeaway is that the more relevant context a system has access to, the more accurately the model is situated in the moment, the better it performs, and the more it behaves like it actually understands what it’s doing.

This shift to context-centric AI is already underway. You can see it in GPT-4o’s ability to ingest screen state, Anthropic’s push for memory and values alignment, Google’s integration of Gemini into Workspace tools, and RAG systems built to inject data into prompts. Clearly, the leaders recognize that the most effective models will be enabled by the richest, most relevant context, delivered at the right time.

Context is what transforms generative AI into adaptive systems: tools that don’t just generate language but understand situations, support decisions, and align with human needs.

Want to share ideas, use cases, or approaches to context engineering? Let’s connect.