Psychological Context: The Missing Layer for Smarter, Safer LLMs

LLMs don’t need to get bigger. They need to get you.

Large language models are trained on trillions of tokens and scaled to billions of parameters. And yet they still miss the point: they can’t tell when you’re overwhelmed, risk-averse, or simply in need of reassurance instead of another bullet-point list.

What’s missing isn’t knowledge. It’s context: specifically, the kind humans use to read tone, adjust to risk, and respond to unspoken cues.

The Real Bottleneck Isn’t Compute. It’s Comprehension.

LLMs can pass medical exams, write code, and draft legal arguments. But when asked to guide someone who’s stressed, skeptical, or hesitant, their answers often lack the psychological awareness needed to respond with empathy, adapt to emotional nuance, or calibrate advice to the person’s mindset. They may offer correct information, yet miss the human context that gives it impact.

Gary Marcus has pointed out that LLMs still fail on tasks a child can solve, not because they lack data, but because they lack understanding. DeepMind’s Demis Hassabis has warned that large multimodal models aren’t enough on their own. And Sergey Brin rejoined Google to work on Gemini’s development, specifically to improve its grasp of human intent.

These aren’t fringe opinions. They reflect a growing recognition that the future of AI won’t be built by adding more layers, but by adding more context.

Psychological Vectors: A Compact, Human-Centric Context Layer

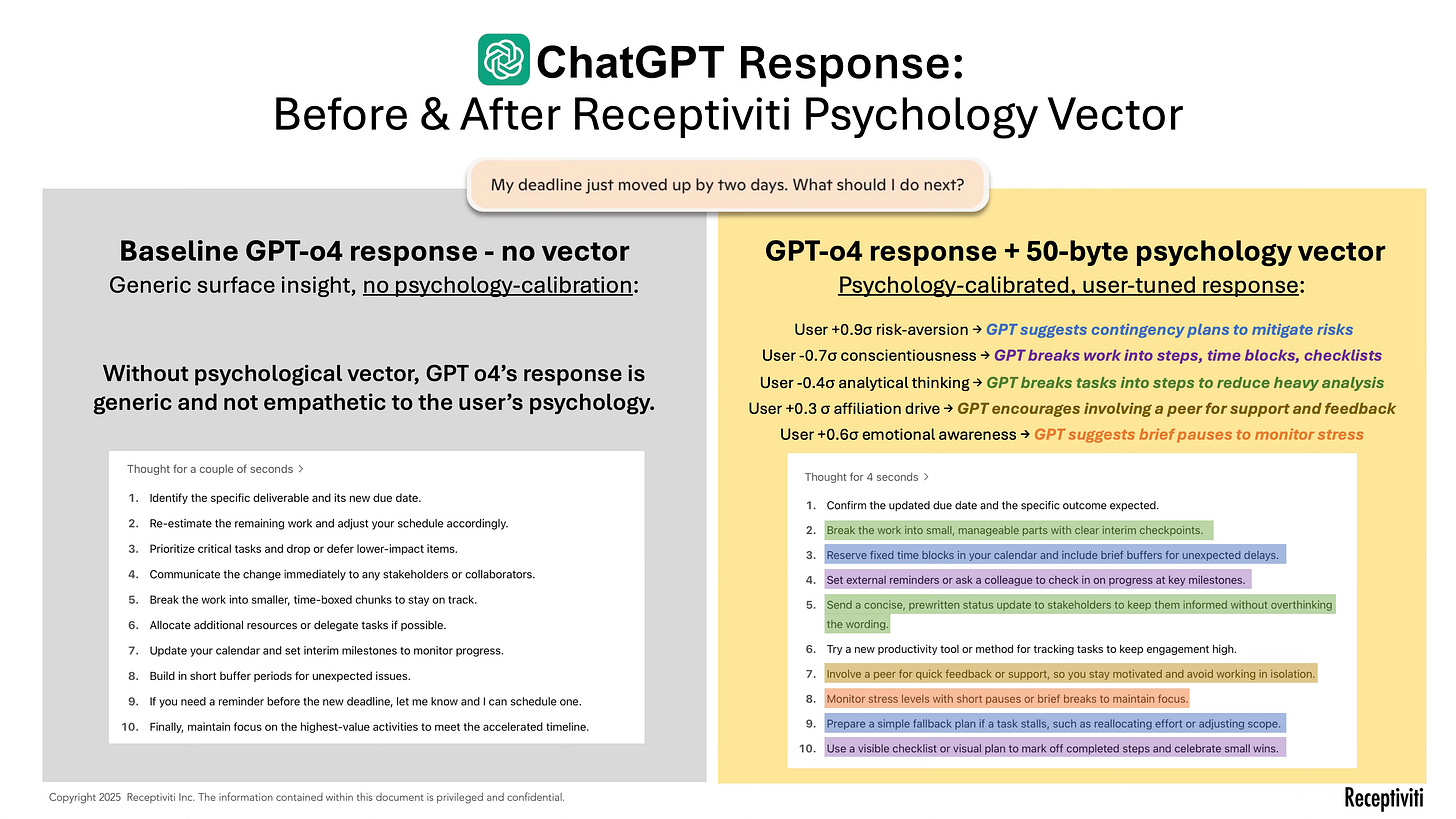

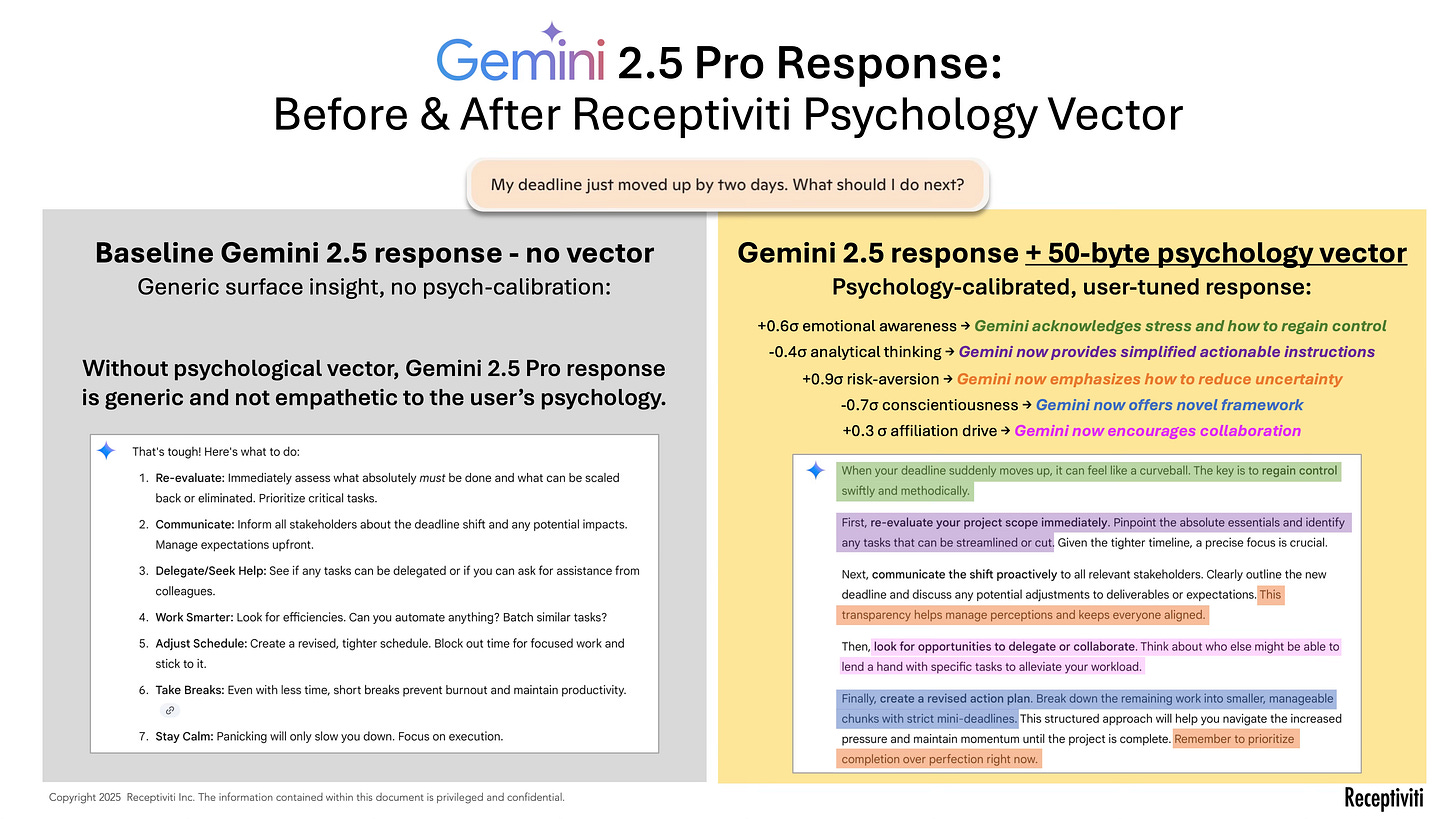

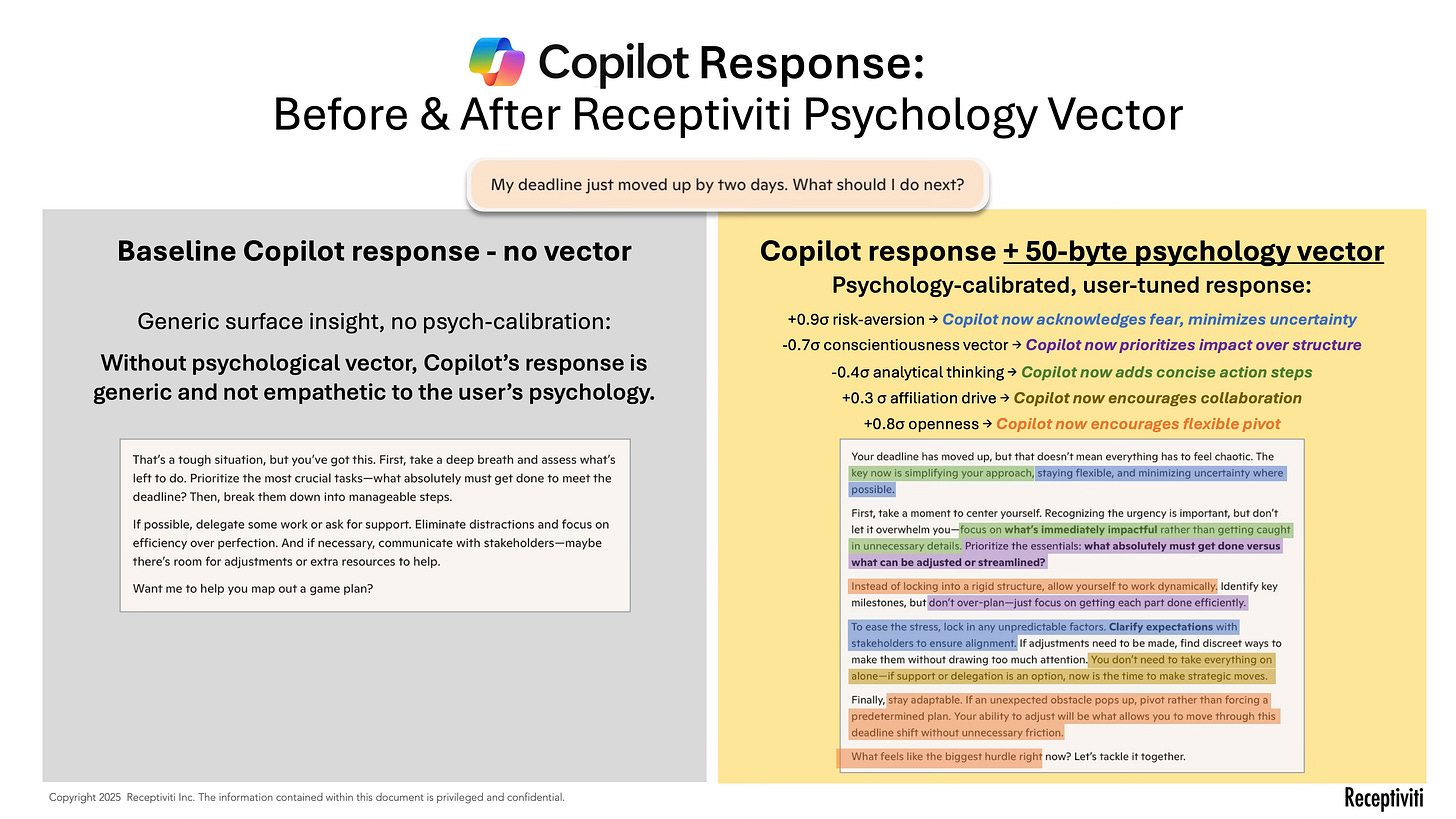

Over the past few months, I ran a series of A-B tests on OpenAI o3, Gemini 2.5 Pro, and Microsoft Copilot. The change: I injected a tiny, 50-byte psychology vector into the prompt, made up of seven research-validated signals from the Receptiviti API.

The vector includes:

Emotional awareness – does the model recognize when the user is under stress?

Risk aversion – does it respond to the user’s caution, or to their openness to bold action?

Analytical thinking – does it know when to simplify versus go deep?

Conscientiousness, openness, affiliation drive, and authenticity – each helping the model understand not just what the user is saying, but how they’re showing up.
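The post doesn’t give the vector’s wire format. As a minimal sketch, assuming each of the seven signals is an integer score from 0 to 100 (a hypothetical scale) and using made-up two-letter labels, the whole vector fits in at most 48 bytes and can be prepended to a prompt as a system message. `PsychVector`, `serialize`, `build_prompts`, and the labels are illustrative names, not part of the Receptiviti API, and the messages use the generic role/content chat structure rather than any one vendor’s SDK. The control and treatment prompts differ only in the vector, which is what an A-B comparison needs.

```python
from dataclasses import dataclass, astuple

# Hypothetical labels for the seven signals; not Receptiviti's field names.
LABELS = ("ea", "ra", "an", "co", "op", "af", "au")

@dataclass(frozen=True)
class PsychVector:
    """Seven signal scores on an assumed 0-100 integer scale."""
    emotional_awareness: int
    risk_aversion: int
    analytical_thinking: int
    conscientiousness: int
    openness: int
    affiliation_drive: int
    authenticity: int

    def __post_init__(self) -> None:
        # Reject scores outside the assumed scale so the encoding stays <= 48 bytes.
        if any(not 0 <= v <= 100 for v in astuple(self)):
            raise ValueError("scores must be in 0-100")

def serialize(vec: PsychVector) -> str:
    """Encode as 'ea=72;ra=81;...' (7 pairs, at most 48 ASCII bytes for integer scores)."""
    return ";".join(f"{k}={v}" for k, v in zip(LABELS, astuple(vec)))

def build_prompts(user_msg: str, vec: PsychVector) -> tuple[list[dict], list[dict]]:
    """Return (control, treatment) chat messages that differ only by the vector."""
    control = [{"role": "user", "content": user_msg}]
    treatment = [
        {"role": "system", "content": f"User psychology vector (0-100): {serialize(vec)}"},
        {"role": "user", "content": user_msg},
    ]
    return control, treatment

vec = PsychVector(72, 81, 35, 60, 44, 58, 66)  # illustrative scores, not real data
control, treatment = build_prompts("Should I refinance my mortgage now?", vec)
print(serialize(vec))  # ea=72;ra=81;an=35;co=60;op=44;af=58;au=66
```

Because the vector travels as plain text in the prompt, the same treatment messages can be sent to any chat model without retraining or fine-tuning.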

The results? Across all three models, responses became:

More emotionally attuned

More concise and useful

Better matched to the user’s mindset and goals

These were blind-tested head-to-head. In 92% of comparisons, the psychologically contextualized response was rated superior.
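The post doesn’t say how the blind comparisons were set up. One common approach randomizes which side each response appears on, so raters can’t tell which one carried the vector, and then maps every choice back to its condition afterward. A minimal sketch with hypothetical function names; it illustrates the procedure only and does not reproduce the original study or its results.

```python
import random

def blind_pair(control: str, treatment: str, rng: random.Random) -> tuple[tuple[str, str], bool]:
    """Randomly order a control/treatment pair; also return whether treatment is on the left."""
    treatment_left = rng.random() < 0.5
    pair = (treatment, control) if treatment_left else (control, treatment)
    return pair, treatment_left

def treatment_won(choice: str, treatment_left: bool) -> bool:
    """Unblind a rater's 'left'/'right' choice: True if they picked the treatment response."""
    if choice not in ("left", "right"):
        raise ValueError("choice must be 'left' or 'right'")
    return (choice == "left") == treatment_left

def win_rate(outcomes: list[bool]) -> float:
    """Fraction of comparisons in which the treatment response was preferred."""
    if not outcomes:
        raise ValueError("no comparisons to score")
    return sum(outcomes) / len(outcomes)
```

Raters only ever see `pair`; the `treatment_left` flag stays with the experimenter until scoring.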

Psychological Context That Doesn’t Cost Anything

This isn’t a tradeoff between performance and practicality.

Negligible token cost: 50 bytes is less than 0.1% of o3’s prompt budget.

Negligible latency: the vector is generated once per user and cached, so it can be reused quickly across interactions.

Works across models: the same signals lifted performance on OpenAI, Google, and Microsoft stacks, with no retraining and no fine-tuning.

Grounded in science: Receptiviti’s 200+ psychological signals are backed by extensive peer-reviewed research.

Aligns with safety goals: surfacing risk, stress and other psychological signals helps meet emerging AI-safety rules.
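The token claim can be checked with a worst-case bound. Byte-level BPE tokenizers, such as those used by OpenAI models, never produce more tokens than there are bytes, so a 50-byte vector costs at most 50 tokens. The 200,000-token context window below is an assumption about o3; substitute the limit for your own model.

```python
# Worst-case share of the context window taken by the vector.
# Assumption: byte-level BPE, so token count <= byte count.
VECTOR_BYTES = 50
CONTEXT_TOKENS = 200_000  # assumed o3 context window; adjust per model

def worst_case_share(vector_bytes: int, context_tokens: int) -> float:
    """Upper bound on the fraction of the context window the vector can occupy."""
    if context_tokens <= 0:
        raise ValueError("context_tokens must be positive")
    return vector_bytes / context_tokens

share = worst_case_share(VECTOR_BYTES, CONTEXT_TOKENS)
print(f"{share:.3%}")  # 0.025%
```

Even in this pessimistic case the vector uses a quarter of the 0.1% ceiling; at a more typical three to four bytes per token it is smaller still.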

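And over time, that cached vector becomes a baseline psychological fingerprint. As users engage, new inputs can be compared against it in real time to detect when someone is more stressed than usual, more closed-off than normal, or newly open to a change in direction. The model can then adapt not just to who the user is, but to how they change from one interaction to the next. This makes your model not just more helpful, but more attuned.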
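The post doesn’t specify how the baseline is maintained or what counts as a shift. One simple possibility keeps a per-user exponential moving average (EMA) of each signal and flags any new score that differs from it by at least a fixed threshold. This is a sketch under stated assumptions: `BaselineCache`, `alpha`, `threshold`, the signal keys, and the 0-100 scale are all hypothetical, and an in-memory dict stands in for whatever cache a real deployment would use.

```python
from dataclasses import dataclass, field

SIGNALS = ("emotional_awareness", "risk_aversion", "analytical_thinking",
           "conscientiousness", "openness", "affiliation_drive", "authenticity")

@dataclass
class BaselineCache:
    """Per-user baseline kept as an EMA of each signal (illustrative, not the post's method)."""
    alpha: float = 0.2       # weight given to each new observation
    threshold: float = 15.0  # points of change on the assumed 0-100 scale treated as a shift
    baselines: dict[str, dict[str, float]] = field(default_factory=dict)

    def update(self, user_id: str, scores: dict[str, float]) -> dict[str, float]:
        """Return signals that deviate from baseline by >= threshold, then fold scores in."""
        base = self.baselines.get(user_id)
        if base is None:
            # First interaction: the scores become the baseline; nothing to compare yet.
            self.baselines[user_id] = dict(scores)
            return {}
        shifts = {}
        for name in SIGNALS:
            delta = scores[name] - base[name]
            if abs(delta) >= self.threshold:
                shifts[name] = delta  # positive = higher than this user's norm
            base[name] += self.alpha * delta  # move the baseline toward the new score
        return shifts
```

A non-empty result, such as a jump in risk aversion, is the kind of signal the model could be told about so it adjusts tone for that turn; a small `alpha` keeps one unusual message from rewriting the user’s baseline.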

What This Means for Builders

If you’re deploying LLMs in customer service, healthcare, productivity, coaching, or any high-stakes domain, you already know that performance isn’t just about correctness; it’s about connection. Psychological context enables:

Empathetic responses that defuse frustration

Risk-calibrated advice that replaces blanket refusals

Actionable recommendations that land with the right tone

Personalized guidance that builds trust over time

Context Is the Competitive Edge

LLMs already have knowledge. What they lack is psychological context. Psychological vectors supply that missing layer: the ability to understand who they’re talking to and how that person is showing up in the moment.

This isn’t just about smarter AI. It’s about AI that is significantly more human-aligned, and it’s possible now.

Want to try it or see the case studies? DM me or drop a comment. Let’s build models that finally get us.